Selection Bias and Confirmation Bias

http://www.humantruth.info/selection_bias.html

By Vexen Crabtree 2017

- Poor Sampling Techniques

- Case Examples

- Social Media Filter Bubbles

- Systematic Literature Review and Meta-Analysis - Overcoming Selection Bias

- Other Sources of Thinking Errors

- Links

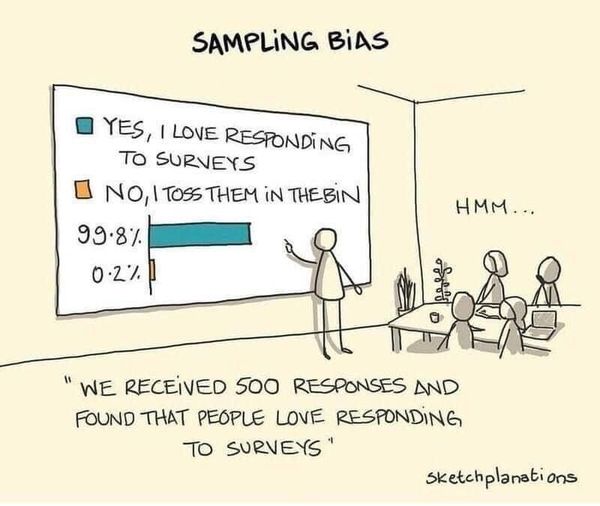

1. Poor Sampling Techniques

“It is the peculiar and perpetual error of the human understanding to be more moved and excited by affirmatives than negatives.”

Francis Bacon (1620)1

Selection Bias is the result of poor sampling techniques2 whereby we use partial and skewed data in order to back up our beliefs. It includes confirmation bias, which is the unfortunate way in which we humans tend to notice evidence that supports our ideas, ignore evidence that contradicts them3,4, and actively seek out flaws in evidence that contradicts our opinion (but automatically accept evidence that supports us)5,6. We are all pretty hard-working when it comes to finding arguments that support us, and when we find opposing views, we work hard to discredit them. But we don't work hard to discredit those who agree with us. In other words, we work at undermining sources of information that sit uncomfortably with what we believe, and we idly accept those who agree with us.

“If we believe a correlation exists, we notice and remember confirming instances. If we believe that premonitions correlate with events, we notice and remember the joint occurrence of the premonition and the event's later occurrence. We seldom notice or remember all the times unusual events do not coincide. If, after we think about a friend, the friend calls us, we notice and remember the coincidence. We don't notice all the times we think of a friend without any ensuing call, or receive a call from a friend about whom we've not been thinking.

People see not only what they expect, but correlations they want to see. This intense human desire to find order, even in random events, leads us to seek reasons for unusual happenings or mystifying mood fluctuations. By attributing events to a cause, we order our worlds and make things seem more predictable and controllable. Again, this tendency is normally adaptive but occasionally leads us astray.”

"Social Psychology" by David Myers (1999)3

Our brains are good at jumping to conclusions, and it is often hard to resist the urge. We often feel clever, even while deluding ourselves! If a bus is late twice in a row on Tuesdays whilst we are stood there waiting for it, we try to work out the cause. We would do much better to accurately note how many times it is late on other days, and how many times it isn't late on a Tuesday. But rare is the person who engages methodically in such trivial investigations. We normally just go with the flow, and think we have arrived at sensible conclusions based on the data we happen to have observed in our own little bubble of life.
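
The bus example comes down to filling in all four cells of a two-by-two tally before drawing any conclusion. A minimal Python sketch, using an invented log of observations (the counts and the name `late_rate` are illustrative assumptions, not data from any real timetable):

```python
# Hypothetical log of bus arrivals: (day_type, was_late).
# The counts are invented for illustration only.
log = (
    [("Tuesday", True)] * 2      # the two late Tuesdays we remember
    + [("Tuesday", False)] * 6   # punctual Tuesdays we never noticed
    + [("Other", True)] * 8      # late buses on other days
    + [("Other", False)] * 24    # punctual buses on other days
)


def late_rate(observations, day_type):
    """Return the fraction of buses that were late on the given day type."""
    outcomes = [late for day, late in observations if day == day_type]
    return sum(outcomes) / len(outcomes)


tuesday_rate = late_rate(log, "Tuesday")
other_rate = late_rate(log, "Other")
print(f"Late on Tuesdays:   {tuesday_rate:.0%}")
print(f"Late on other days: {other_rate:.0%}")
```

With these invented counts the bus is late a quarter of the time on Tuesdays and on other days alike, so the two memorable late Tuesdays say nothing special about Tuesdays.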

All of this is predictable enough for an individual, but another form of Selection Bias occurs in mass media publications too. Cheap tabloid newspapers publish every report that shows foreigners in a bad light, or shows that crime is bad, or that something-or-other is eroding proper morality. And they mostly avoid any reports that say the opposite, because such reassuring results do not sell as well. Newspapers as a whole simply never report anything boring - therefore they constantly support world-views that are divorced from everyday reality. Sometimes commercial interests skew the evidence that we are exposed to - drugs companies conduct many pseudo-scientific trials of their products but their choice of what to publish is manipulative - studies in 2001 and 2002 show that "those with positive outcomes were nearly five times as likely to be published as those that were negative"7. Interested companies just have to keep paying for studies to be done, wait for a misleading positive result, and then publish it and make it the basis of an advertising campaign. The public have few resources to overcome this kind of orchestrated Selection Bias.
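
To see how strongly selective publication can distort the record, here is a rough worked example in Python. It assumes, purely for illustration, that a product does nothing, that 1 in 20 trials nonetheless comes out positive by chance, and that positive trials are five times as likely to be published as negative ones (the ratio quoted above). The function name `published_positive_share` is hypothetical.

```python
from fractions import Fraction


def published_positive_share(p_positive, publish_ratio):
    """Share of published trials that are positive.

    p_positive    -- fraction of all trials with a positive result
    publish_ratio -- how many times likelier a positive trial is to be
                     published than a negative one
    """
    positive_weight = p_positive * publish_ratio
    negative_weight = 1 - p_positive
    return positive_weight / (positive_weight + negative_weight)


# Assumed inputs: 5% chance positives, five-fold publication advantage.
share = published_positive_share(Fraction(1, 20), 5)
print(share, float(share))
```

Under these assumptions positive results make up 5 in 24 of the published trials (about 21%), roughly four times their true share of 5%.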

RationalWiki mentions that "a website devoted to preventing harassment of women unsurprisingly concluded that nearly all women were victims of harassment at some point"8. Another example, a statistic that is most famous for being wrong, is that one in ten males is homosexual. This was based on a poll of the community surrounding a gay-friendly publication - the sample of respondents was skewed away from the true average, hence misleading data was observed.

The only solution for these problems is proper and balanced statistical analysis, after actively and methodically gathering data, alongside raising awareness of Selection Bias, Confirmation Bias, and other thinking errors. This level of critical thinking is quite a rare endeavour in anyone's personal life - we don't have the time, the inclination, the confidence, or the skill, to properly evaluate subjective data. Unfortunately fact-checking is also rare in mass media publications. Personal opinions and news outlets ought to be given little trust.

“When examining evidence relevant to a given belief, people are inclined to see what they expect to see, and conclude what they expect to conclude. Information that is consistent with our pre-existing beliefs is often accepted at face value, whereas evidence that contradicts them is critically scrutinized and discounted. Our beliefs may thus be less responsive than they should be to the implications of new information.”

"How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life" by Thomas Gilovich (1991)9

2. Case Examples

2.1. The Evidence for Supernatural Powers

Take a very simple example provided by skeptical thinker Robert Todd Carroll in "Unnatural Acts: Critical Thinking, Skepticism, and Science Exposed!" (2011)10: "If two psychics pick opposite winners in an athletic contest, one of them may appear to have more knowledge than the other, but the appearance is an illusion". If both published their prediction in a newspaper a week before the event, the paper that had (by luck) hosted the correct prediction is more likely to run a second article announcing that the psychic was correct. The other paper is very unlikely to run an article saying that the psychic was wrong: they'll simply ignore it and move on to more interesting topics. The poor public, therefore, only ever read of success stories, and are misled into thinking that evidence exists for psychic fortune-telling, when in fact it really is pure luck.

“Let us imagine that one hundred professors of psychology throughout the country read of Rhine's work and decide to test a [human subject for signs of ESP capability]. The fifty who fail to find ESP in their first preliminary test are likely to be discouraged and quit, but the other fifty will be encouraged to continue. Of this fifty, more will stop work after the second test, while some will continue because they obtained good results. Eventually, one experimenter remains whose subject has made high scores for six or seven successive sessions. Neither experimenter nor subject is aware of the other ninety-nine projects, and so both have a strong delusion that ESP is operating. The odds are, in fact, much against the run. But in the total (and unknown) context, the run is quite probable. (The odds against winning the Irish sweepstakes are even higher. But someone does win it.) So the experimenter writes an enthusiastic paper, sends it to Rhine who publishes it in his magazine, and the readers are greatly impressed.

At this point one may ask, "Would not this experimenter be disappointed if he continues testing his subject?" The answer is yes, but as Rhine tells us, subjects almost always show a marked decline in ability after their initial successes.”

"Fads & Fallacies in the Name of Science" by Martin Gardner (1957)11

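Gardner's thought experiment can be checked with a little arithmetic. The sketch below assumes that each session is an independent coin-flip that a subject with no ESP passes with probability one half (matching the fifty of one hundred who fail the first test), and that an experimenter quits after the first failure. Those assumptions, and the function names, are illustrative rather than Gardner's own.

```python
from fractions import Fraction


def expected_survivors(experimenters, sessions, p_pass=Fraction(1, 2)):
    """Expected number of experimenters whose subject passes every session."""
    return experimenters * p_pass ** sessions


def prob_at_least_one_run(experimenters, sessions, p_pass=Fraction(1, 2)):
    """Probability that at least one subject passes every session by luck."""
    p_run = p_pass ** sessions
    return 1 - (1 - p_run) ** experimenters


survivors = expected_survivors(100, 6)
chance = prob_at_least_one_run(100, 6)
print(f"Expected unbroken runs of six: {float(survivors):.2f}")
print(f"Chance of at least one such run: {float(chance):.2f}")
```

For any single experimenter the odds against six straight successes are 63 to 1, yet among one hundred experimenters we expect about 1.56 such runs, and the chance that at least one appears is roughly 79%.
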
2.2. Was the Exam Fair?12

In "How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life" by Thomas Gilovich (1991)13 the author proposes that a student who fails an exam will often say the exam was unfair. They will recall questions that were ambiguous (a feature of all exams, it seems). If some are found, then they think their accusation is accurate. If not, they will then weigh the content of the exam against what they were taught in their lectures. Any discrepancy will result in them believing the exam was unfair. Failing that, they will ask other students and if others agree, then again, they think they are correct in believing the exam was unfair. There are many possible places in which to look for confirming evidence.

Compare this to a student who passes: such a student does not search for the reasons for the pass in external circumstances. They do not think the test unfair, do not search for areas of subject matter that do not match with the test, and do not think the test unfair 'just because' another student thinks so. This student needs convincing it is unfair and doesn't search for such evidence themselves.

“By considering a number of different sources of evidence and declaring victory whenever supportive data are obtained, the person is likely to end up spuriously believing that his or her suspicion is valid. [...] When the initial evidence supports our preferences, we are generally satisfied and terminate our search: when the initial evidence is hostile, however, we often dig deeper, hoping to find more comforting information, or to uncover reasons to believe that the original evidence was flawed. By taking advantage of 'optional stopping' in this way, we dramatically increase our chances of finding satisfactory support for what we wish to be true.”

"How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life" by Thomas Gilovich (1991)14

3. Social Media Filter Bubbles15

Despite the general idea that the Internet increases access to information, there is worry that the way many social media platforms work is making Selection Bias worse. An article in The Times worries about the way social media is "pushing news to users based on what they have previously liked and screening out alternative points of view" and allowing bubbles of extremism to go unchallenged16. Likewise, in 2011 the thought-provoking series of TED Talks hosted Eli Pariser17, who warned about online "filter bubbles", wherein we become surrounded by automated feeds that cater to us and, conversely, filter out things we haven't liked. His book on the topic has been widely acclaimed by sociologists. In other words, social media algorithms and trying-to-be-clever news websites are hiding things from us if they think we don't agree or aren't interested. This new form of externalized selection-bias makes it even less likely for us to realize that our beliefs and viewpoints ought always to be challenged.

Platforms such as Facebook have replied (see the links, below) saying that much of this is due to user choice - people are shown feeds as a result of the actions of their friends, and their friends often share their opinions. But the solution is easy: make sure news feeds are scattered around more freely. Attempting to make us 'happy' by only showing us news articles shared by friends isn't good for us. To defeat selection bias, we also have to be shown news articles that are specifically not popular amongst our friends.

"Your Filter Bubble is Destroying Democracy" by Mostafa M. El-Bermawy on wired.com (2016 Nov 18).

"How Social Media Filter Bubbles and Algorithms Influence the Election" in The Guardian18 (2017 May 22).

"Did Facebook's Big Study Kill My Filter Bubble Thesis?" by Eli Pariser on Wired.com (2015 May 07).

4. Systematic Literature Review and Meta-Analysis - Overcoming Selection Bias12


To prevent errors it is best to take a methodical approach to evaluating evidence. In the scientific method this is called Systematic Review or Literature Review. The combining of statistical data from experiments into a single data-set is called a meta-analysis, a process which makes statistical analysis more accurate19,20. Specific search criteria are laid out before any evidence is sought, and each source of information is methodically judged according to pre-set criteria before looking at its results. This eliminates, as much as possible, the human bias whereby we subconsciously find ways of dismissing evidence we don't agree with. Once we've done that, we tabulate the results of each source, and it is revealed to us which sources confirm or disconfirm our idea. The Cochrane Collaboration does this kind of research on healthcare subjects and, as a result of its gold-standard methodical approach, it has "saved more lives than you can possibly imagine"21. Systematic Literature Review is thus one of the most important checks-and-balances of the scientific method.
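
As a rough illustration of why pooling results makes statistical analysis more accurate, the following Python sketch combines three invented study results using inverse-variance weighting, one common fixed-effect method (the text above does not say which pooling method it has in mind). The effect sizes, standard errors and the name `pool_fixed_effect` are assumptions made up for the example.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Combine study estimates with inverse-variance (fixed-effect) weights.

    Returns the pooled effect and its standard error.
    """
    weights = [1 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, pooled_se


# Hypothetical studies: effect estimates and their standard errors.
effects = [0.30, 0.10, 0.20]
std_errors = [0.20, 0.10, 0.20]

pooled, pooled_se = pool_fixed_effect(effects, std_errors)
print(f"Pooled effect: {pooled:.3f} (standard error {pooled_se:.3f})")
```

The pooled standard error comes out smaller than that of any single study, which is the sense in which the combined data-set is more precise than its parts. This only helps if the studies were chosen by pre-set criteria; pooling a cherry-picked set simply averages the bias.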

For more, see:

- "Systematic Literature Review and Meta-Analysis - Overcoming Selection Bias" by Vexen Crabtree (2017).

5. Other Sources of Thinking Errors

- Cognitive and Internal Errors

- Pareidolia: Seeing Patterns That Aren't There

- Selection Bias and Confirmation Bias

- Blinded by the Small Picture

- The Forer (or Barnum) Effect

- We Dislike Changing Our Minds: Status Quo Bias and Cognitive Dissonance

- Physiological Causes of Strange Experiences

- Emotions: The Secret and Honest Bane of Rationalism

- Mirrors That We Thought Were Windows

- Social Errors

- The False and Conflicting Experiences of Mankind

- Commentaries

- The Prevention of Errors: Science and Skepticism